As usual, it has been a long time since I last updated my blog… my bad, I am always kind of bad at finding free time between projects and personal life (I am almost done with an amazing KVM that lets me remote control any machine from the UEFI BIOS, but this is a huge story for another blog post). Today, after having been asked multiple times, and after reading some analysis on the internet (like this one from @_0xDeku), I would like to talk about an attack as old as the early days of Windows… the rollback attack.

Back in the day, more than two years ago, I received some weird bugs to take care of, regarding the unexpected possibility of replacing the “securekernel.exe” binary with an older version in a fully updated Windows OS. My first thought was… of course… did the person who opened the bug just discover “warm water” 😂?

The entire Code Integrity module and the Windows image verification system were never designed to support such a scenario. All that MM and CI (in all their implementations) do is verify that the module being loaded has the proper digital signature, and is signed with the proper verified timestamp… they do almost nothing to check whether the version being loaded is older than the latest one available or ever run.

So, after a brief discussion with some coworkers, I closed the bugs as “by design” without too much thinking, since fixing them would have required re-implementing the entire way in which Windows loads every boot and runtime image (and not only the Secure Kernel or the modules belonging to the virtualization solutions)…

…every problem comes from trust…

But let’s step back for a moment and discuss why preventing rollback in a secure way is a difficult problem to solve. Let’s put ourselves for a moment in the shoes of an attacker who wants to exploit a bug in an old version of… let’s say… the Hypervisor:

- A good starting point is to replace the original Microsoft-signed hvxx64.exe (where, as you know, the first “x” depends on the platform architecture) with an older version that has the bug, and hope not to break the thin layer of binary compatibility between the Hypervisor, Secure Kernel and regular NT kernel (as a note, I originally thought that this layer was waaay more sensitive, but I was wrong)

- At this point, let’s assume that a minimum version list exists, in the form of a file, a registry key or whatever…

- What would the attacker do at this point? Easy: he/she would also replace the “minimum version list” with an older one.

- This works even if the HV loader refuses to load the Hypervisor binary due to a version mismatch: he/she can also replace the OS loader binary.

- As you can imagine, this can be repeated along the chain, until the attacker has replaced everything in the chain that verifies the version of the target “leaf” module.

- Even worse, assume for a moment that the version of the “minimum version list” file is hard-coded into the OS loader: the attacker will simply replace the OS loader itself.
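The chain described above can be sketched with a toy model. This is only an illustration under simplifying assumptions: the `Image` class, the `min_next_version` field and the version numbers are all hypothetical, and each stage is assumed to check nothing but the signature and version of the stage it loads.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Image:
    """Toy boot image (hypothetical model, not a real Windows structure)."""
    name: str
    version: int
    min_next_version: int = 0  # hypothetical hard-coded floor for the next stage
    signed: bool = True        # old and new builds both carry a genuine signature

def boot(chain):
    """Each stage verifies only the stage it loads; nobody verifies the first one."""
    for verifier, target in zip(chain, chain[1:]):
        if not target.signed or target.version < verifier.min_next_version:
            return False
    return True

def make_chain(loader_ver, hv_loader_ver, hv_ver):
    return [
        Image("os_loader", loader_ver, min_next_version=loader_ver),
        Image("hv_loader", hv_loader_ver, min_next_version=hv_loader_ver),
        Image("hypervisor", hv_ver),
    ]

up_to_date = make_chain(10, 10, 10)
only_hv_rolled_back = make_chain(10, 10, 7)
everything_rolled_back = make_chain(7, 7, 7)
```

In this model the fully updated chain boots and a partial rollback is caught, but rolling back every link at once passes all the checks, because the first verifier is itself replaceable.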

More than one year ago, I was officially asked to resolve this issue, and I started to scratch my head, with the goal of finding a solution for it… After many meetings and talks with other teams… what did we end up with?

What is a good “root” of trust?

So, as the reader can see, the problem can be solved by defining the “root” of trust: an entity that cannot be replaced or spoofed and that provides the initial verification for the next stage of trust. Windows already supports an entity like that, and MS also made it mandatory for Windows 11: the TPM. Readers may ask: why can the TPM not be spoofed?

The answer is simple: because it uses asymmetric cryptography to produce and sign an “attestation report”, which contains a “TCG log” with all the measurements of the code (and data) that the firmware and the boot / OS loader executed (remember: a measurement is a hash – usually SHA256 – of a particular piece of data or code. Measurements can only be extended, never deleted or reset. For this and other TPM concepts, I will let the reader take a look at the great “A Practical Guide to TPM 2” book, which is even free in its PDF edition).
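To make the “extend” idea concrete, here is a minimal sketch of how a SHA256 PCR evolves: the new value is the hash of the old value concatenated with the digest of the measured data. The function names and the sample event strings are mine, and a real TPM does this in hardware; the code only mimics the arithmetic.

```python
import hashlib

ZERO_PCR = bytes(32)  # a SHA256 PCR starts as 32 zero bytes after a platform reset

def extend(pcr: bytes, data: bytes) -> bytes:
    """new PCR = SHA256(old PCR || SHA256(data))"""
    digest = hashlib.sha256(data).digest()
    return hashlib.sha256(pcr + digest).digest()

def replay(events) -> bytes:
    """Recompute the final PCR value from an ordered list of measured blobs,
    which is what a verifier does with the event log."""
    pcr = ZERO_PCR
    for blob in events:
        pcr = extend(pcr, blob)
    return pcr

# Hypothetical measured blobs, for illustration only.
boot_log = [b"firmware", b"boot manager", b"os loader"]
final_pcr = replay(boot_log)
```

Since every new value depends on the previous one, there is no way to “un-extend” a measurement: changing, dropping or reordering any event produces a different final value, and a verifier replaying the log will notice the mismatch.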

How the signing process using asymmetric cryptography works is described in my Windows Internals book (look at the section about Secure Boot in Chapter 12), but it is not the only part of the motivation. The final part of this (long) story is that the TCG log (and thus everything measured by the TPM) is signed with the so-called Attestation Identity Key (AIK, to be specific the private part of it), which is generated in hardware by the TPM. Trust in the AIK is established through a process (I do not even know all the details to be honest, it is signed or something) that chains the AIK back to the root Endorsement Key (or EK). The EK is a unique, non-migratable asymmetric key embedded (or “fused”) into the TPM during manufacturing. Note that attackers cannot extract or forge the EK, hence the root of trust is intact.

Putting together the pieces of the puzzle – WDAC policies

After having identified the “root of trust”, I had to choose a technology able to express all the requirements for a “minimum version list”. When designing complex solutions, it is always a great idea to start with something that already exists, and see if it can be adapted to the new requirements. After a couple of weeks spent dealing with the requirements, my amazing colleagues in the Code Integrity team showed me the “WDAC policies”, also known as Windows Defender Application Control policies.

Readers can find all the specs here: Application Control for Windows | Microsoft Learn. Without going into too much detail, a WDAC policy is an XML file with descriptors specifying whether to block or allow the loading of an image based on various rules. Customers can create their own policies and feed them to Code Integrity (CI) using CiTool or PowerShell. At the end of the day, the new policy will be stored in the Windows system directory (“\System32\CodeIntegrity\CIPolicies\Active”) or in the EFI system partition (“\EFI\Microsoft\Boot\CiPolicies\Active”). All the policies are consumed by the Boot manager or the OS loader (in the former case, boot applications can also be blocked).

This blog post does not aim to cover all the aspects of WDAC policies, but just to give the reader a simple overview.

A WDAC policy XML file is composed of the following sections:

- The “Rules” section describes the global policy control options, like Audit mode, WHQL requirements and so on (described here). The section is mandatory.

- The “FileRules” section describes a list of files (and their characteristics) to which the rules should be applied. File rule attributes are described here. As the reader can see, a WDAC policy can identify a file in a lot of ways: FileName, Hash, Path, Signer and so on…

- The “Signers” and “EKUs” sections describe the digital certificates used to sign a particular file. Signers and files are usually connected via the “FileAttribRef” attribute (this is important, since this link applies the signature search to the correct files).

- The “SigningScenarios” section is the most important one and describes each class of rule to apply.
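To give an idea of how these sections fit together, here is a small sketch that parses a heavily trimmed policy fragment and follows the link from a signer to its file rule. Everything in the fragment (IDs, names, file name, version) is a placeholder invented for illustration, the XML namespace and certificate data of a real policy are omitted, and the helper functions are mine, not part of any Microsoft tool.

```python
import xml.etree.ElementTree as ET

# Illustrative fragment only: NOT a deployable policy and NOT the real
# anti-rollback policy. All IDs, names and versions are placeholders.
POLICY_XML = """
<SiPolicy>
  <Rules>
    <Rule><Option>Enabled:Audit Mode</Option></Rule>
  </Rules>
  <EKUs />
  <FileRules>
    <FileAttrib ID="ID_FILEATTRIB_EXAMPLE" FileName="example.exe"
                MinimumFileVersion="1.2.3.4" />
  </FileRules>
  <Signers>
    <Signer ID="ID_SIGNER_EXAMPLE" Name="Example signer">
      <FileAttribRef RuleID="ID_FILEATTRIB_EXAMPLE" />
    </Signer>
  </Signers>
  <SigningScenarios>
    <SigningScenario ID="ID_SIGNINGSCENARIO_EXAMPLE" Value="131">
      <ProductSigners>
        <AllowedSigners>
          <AllowedSigner SignerId="ID_SIGNER_EXAMPLE" />
        </AllowedSigners>
      </ProductSigners>
    </SigningScenario>
  </SigningScenarios>
</SiPolicy>
"""

def parse_version(text):
    """Turn 'a.b.c.d' into a tuple so that versions compare numerically."""
    return tuple(int(part) for part in text.split("."))

def minimum_versions(policy_xml):
    """Map each signer name to the (file name, minimum version) pairs it references."""
    root = ET.fromstring(policy_xml.strip())
    attribs = {fa.get("ID"): fa for fa in root.iter("FileAttrib")}
    result = {}
    for signer in root.iter("Signer"):
        linked = [attribs[ref.get("RuleID")] for ref in signer.findall("FileAttribRef")]
        result[signer.get("Name")] = [
            (fa.get("FileName"), parse_version(fa.get("MinimumFileVersion")))
            for fa in linked
        ]
    return result

def meets_minimum(file_version, minimum):
    """True when the file version is at or above the minimum from the policy."""
    return parse_version(file_version) >= minimum
```

Comparing versions as tuples of integers rather than as strings matters here: as plain text, “1.10.0.0” would sort before “1.2.3.4”.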

There is a lot of documentation available on the web, so interested readers can consult it. I found some pretty good documentation, written by an external individual contributor (@CyberCakeX), available here: https://github.com/HotCakeX/Harden-Windows-Security/wiki/Introduction.

So, we created an individual WDAC policy file, called VbsSiPolicy (present in your Insider release system under “\Windows\System32\CodeIntegrity”), which contains the minimum versions of all the modules located in the TCB (I am speaking about the OS loader, Boot drivers, HV and VBS modules). From now on, in this blog post, I will call this WDAC policy the “Anti-rollback” policy.

The Boot library loads the initial policies located in the EFI system volume very early in the boot process (see BlSIPolicyCheckPolicyOnDevice for those who are interested). After the boot volume (which may be BitLocker-encrypted) is unlocked and execution control is transferred to the OS loader, the VBS policy file is loaded, parsed and activated. From now on, all the TCB modules need to pass a minimum version check…

…but… so, what? How does the OS defend against bad attackers?

If the reader asked this question it means that she/he is on the right track: an attacker can replace the policy file itself with an old one, or replace Winload too. How to deal with this?

Here is how:

- The OS loader, after it has calculated the VBS policy (described in the Windows Internals book, not to be confused with the Anti-rollback policy), checks whether the anti-rollback policy has been correctly loaded (every policy is identified by a GUID) and, if not, or if the version of the policy is lower than the hardcoded one, it immediately crashes the system.

- All the SI policies (including the anti-rollback one) are always measured by the TPM (in PCR 13). This means that remote parties, through attestation, can verify whether the anti-rollback policy is present on the system.

- The main idea is that the anti-rollback policy version and hash will be tied to the PCRs used to seal the VSM master key. Every time an update is delivered to the target system, the VSM master key ring will be predictively re-sealed using the new anti-rollback policy version.
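The sealing idea in the last point can be sketched with a toy model. This is only an illustration under strong assumptions: `ToyTpm`, `SealedBlob` and the policy blobs are hypothetical names of mine, only PCR 13 is modelled, and a real TPM enforces the PCR check internally and never exposes the secret like this.

```python
import hashlib
from dataclasses import dataclass

ZERO_PCR = bytes(32)

def extend(pcr: bytes, data: bytes) -> bytes:
    """new PCR = SHA256(old PCR || SHA256(data))"""
    return hashlib.sha256(pcr + hashlib.sha256(data).digest()).digest()

class ToyTpm:
    """Toy model with a single PCR (number 13); not a real TPM interface."""
    def __init__(self):
        self.pcr13 = ZERO_PCR

    def reboot(self):
        self.pcr13 = ZERO_PCR

    def measure(self, blob: bytes):
        self.pcr13 = extend(self.pcr13, blob)

@dataclass(frozen=True)
class SealedBlob:
    secret: bytes          # a real TPM keeps this encrypted; the toy stores it in clear
    expected_pcr13: bytes  # PCR value that must be present at unseal time

def seal(secret: bytes, expected_pcr13: bytes) -> SealedBlob:
    return SealedBlob(secret, expected_pcr13)

def unseal(tpm: ToyTpm, blob: SealedBlob) -> bytes:
    if tpm.pcr13 != blob.expected_pcr13:
        raise PermissionError("PCR 13 does not match the sealed state")
    return blob.secret

def boot_and_unseal(tpm: ToyTpm, policy_blob: bytes, blob: SealedBlob) -> bytes:
    """Simulate a boot that measures the given policy, then try to unseal."""
    tpm.reboot()
    tpm.measure(policy_blob)
    return unseal(tpm, blob)

def predictive_reseal(secret: bytes, next_policy: bytes) -> SealedBlob:
    """Seal against the PCR value that the NEXT boot is expected to produce."""
    return seal(secret, extend(ZERO_PCR, next_policy))
```

Here `predictive_reseal` is run while the update is being installed, so that the next boot, which measures the new policy, can still unseal the key, while a boot that measures an older policy ends up with a different PCR 13 value and gets nothing.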

As informed readers know, the main entities of a system that need to be protected are the secrets that it stores. All the secrets in the Windows OS are stored in VSM. Hence, with the scheme above, it is still true that an attacker can replace the OS loader and the anti-rollback policy (and potentially the system will survive), but, in that case, all the secrets are irreparably gone, which achieves the protection that we desire (talking about Windows Hello or all the secrets stored in VTL 1 is beyond the scope of this blog post).

Needless to say, the scheme above is extremely simplified, since there are a lot of problems that I have not even mentioned, like:

- The TPM is mapped in the NT kernel via classical MMIO, which means that attackers can act as a “man in the middle” in the communication between the Secure Kernel and the TPM.

- Updating the Anti-rollback policy after an update means that people cannot uninstall the update (unless they lose all the secrets stored in the machine), and this is not acceptable, since Windows runs on millions of different systems, and sometimes it happens that an update screws something up.

- Some updates are delivered with Hotpatches (you can read about the NT hotpatch engine here, admiring my incredible terrible Excel drawing skills 😊), which are binaries that can not live without the old base image.

So, long story short, solving this problem correctly is far from easy!

How to debug this black magic?

Part of what I discussed here is already implemented and distributed in Windows. I downloaded and installed the public Windows Insider release build 27695 from the official website (which, at the time of this writing, is already old), and did some investigation…

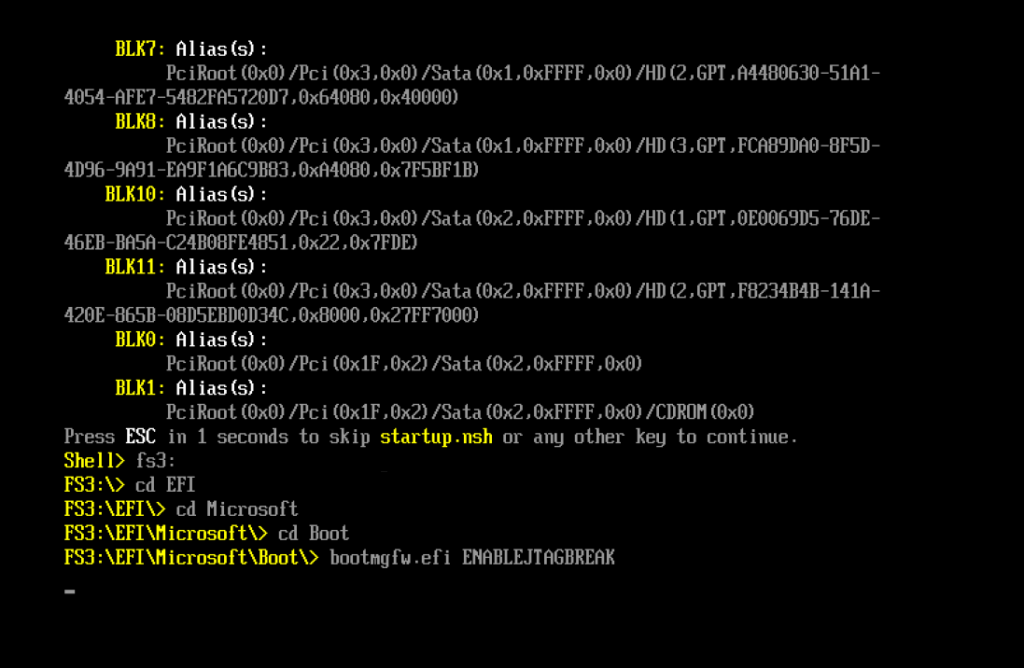

First, needless to say again, the initial legacy WDAC policies are processed very early in the boot process, before any kind of debugger connects. So how to debug that? Easy, using JTAG (EXDI) or QEMU. Speaking of them, SourcePoint does a good job in stepping through the initial BootMgr code. The strategy is to exploit a UEFI shell and the JTAG break that Alexis and I implemented a long time ago. A picture is better than any explanation:

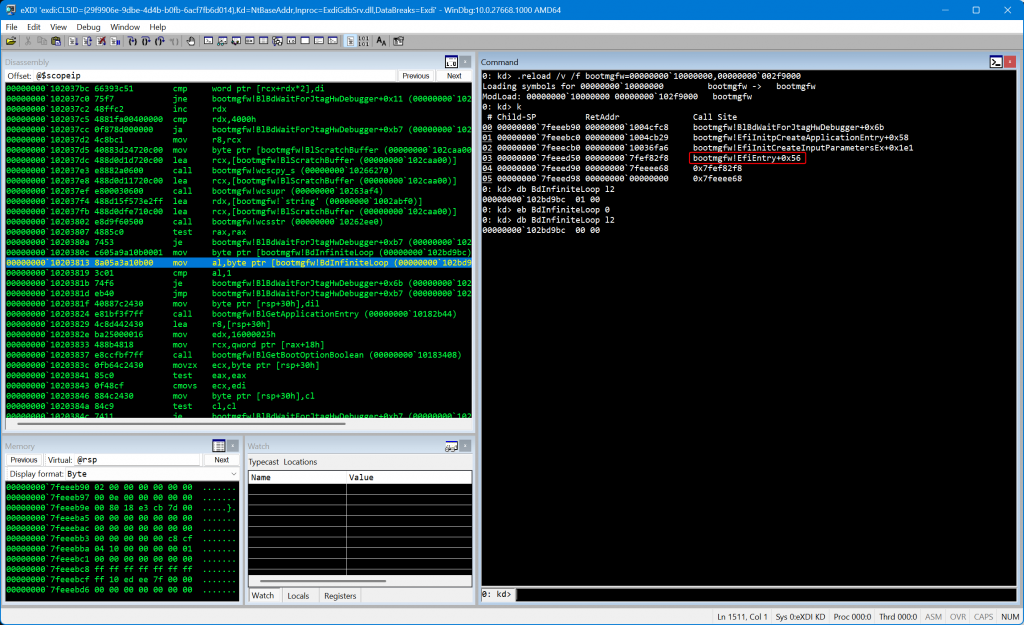

If you do the same and then break into the JTAG debugger, you will realize that the code execution is stuck at a very early stage of the EfiMain entrypoint of the Boot manager.

From there, you just need to set the byte addressed by the BdInfiniteLoop symbol to 0 and set your breakpoints. Reverse engineering the Bootmgfw binary with the symbols is trivial and you can find references to the term “SiPolicy” there and in the OS loader using IDA (spoiler: a good starting point is the BlSIPolicyPackagePolicyFiles routine).

Note that a lot of Code Integrity code works only when Secure Boot is on. I have been able to install customized Secure Boot keys in my QEMU virtual machine, and realized that on the AAEON board the keys are already loaded by default, which means that you can debug it (using the JTAG EXDI interface connected to SourcePoint) with Secure Boot ON and also witness other WDAC policies being applied (like Secure Boot policies, which prevent classical kernel debuggers from being enabled).

I think that this is all for now folks! It is clear that I expect Yarden and some other Internals people to dig deeper and explain what I missed here 😊.

See you in the next blog post!

AaLl86

REFERENCES

- TPM as root of trust: https://gufranmirza.com/blogs/trusted-platform-module-and-attestation

- Installing customized Secure Boot keys: https://projectacrn.github.io/latest/tutorials/waag-secure-boot.html

- A Practical Guide to TPM 2 (great book on the TPM): https://library.oapen.org/handle/20.500.12657/28157

- Windows Internals 7th Edition Part 2: https://www.microsoftpressstore.com/store/windows-internals-part-2-9780135462331